PInvoking DirectX from Silverlight

Before moving on to Windows 8 development, I decided to write some legacy software. Well, actually, this legacy software is perfectly up-to-date Windows 7 level software, and the tricks presented here will be useful for years to come. It’s just that Windows 8 (Consumer Preview) provides standard solutions to the problems solved here. This blog post discusses the use of a DirectX application, packaged as a DLL, by a Silverlight application, via PInvoke.

The problems tackled here stem from the desire to have Rich Internet Applications (RIAs) for Windows that use computational resources on the client computer: in particular DirectX for 3D-graphics, X3DAudio for 3D-audio, and the GPU (Graphics Processing Unit – a powerful, highly parallel processor). Silverlight provides the facilities to write RIAs, but has a somewhat outdated 3D-graphics library: a subset of XNA – a managed wrapper for DirectX9 (but we want DirectX11, at least!). This Silverlight 3D-graphics library is also not very extensive; it lacks, for example, 3D-audio.

On the other hand, Silverlight does provide facilities for interoperability with native code, e.g. by means of PInvoke: the invocation of native code in Dynamic Link Libraries (DLLs). PInvoke is here the bridge between Silverlight and DirectX code.

This blog post presents:

- A sample DirectX11 application, and its transformation into a DLL to be used from Silverlight.

- A Silverlight application that calls methods in the dll.

- How to install and uninstall the DLL, and how to manage its lifetime explicitly, so the DLL may be uninstalled by the Silverlight application itself.

- Performance aspects of the Silverlight-DirectX application, and a comparison with a Silverlight application that uses the Silverlight 3D-graphics library for the same task.

- Concluding remarks, including the observation that this application should have had 3D-audio to decisively mark the advantage of the approach presented here (but at some point, you just have to wrap up).

The DirectX 11 Sample Application

The DirectX 11 Tutorial05 sample application will serve as the application a user wants to run on his or her PC, and that uses resources already present on that PC. This DirectX application is the simplest application that contains some animation, and it also has a part – the small cube – that we can multiply in order to generate data for various performance loads.

To that end we transform it into a DLL with as much unnecessary functionality stripped as possible, and an adequate interface added, including the code to transfer the data we need in the Silverlight application. Let’s take a look at the main changes.

Minimizing Window Management Code

For starters, we do not need a window: we use the DirectX application only to compute the 3D-graphics we present in the Silverlight application. The wWinMain (application entry point) function now looks like this:

Sample code like the above is included in the text as pictures. If you would like to have the code, just leave a comment on this blog with an e-mail address and I will send it to you.

The function has no “Windows” parameters any more, nor has it a main message loop. The InitWindow function has been reduced to:

We do need to create a window in order to create a swap chain, and only for that reason, so we keep it as simple and small as possible. Note that the wcex.lpfnWndProc is assigned the DefWindowProc. That is: the application has no WindowProc of its own.

Create Texture to be Used in Export

In order to export the 3D-graphics data, an additional texture (a texture is a pixel array) called g_pOutputImage is created in the InitDevice function:

This texture has usage “Staging”, so the CPU can access it, and we specified CPU access as “Read”. With these settings we can’t bind the texture to the DeviceContext anymore, so no BindFlags. Note that we cannot have a texture that the GPU writes to, and the CPU reads from. Had that been possible, we could have had a data structure that both DirectX and Silverlight could use directly. Since this is impossible, we will have to perform expensive copy operations. Alas.

A final change in this same function is that we do not release the pointer to the back buffer, but keep it alive in order to export the graphics data in the Render function.

Rendering 3D-Graphics

The Render function has a loop added so we can have multiple small cubes. The idea is to compute a World matrix for each additional small cube. That is, we have only one cube, but draw it multiple times at different locations. Like this:

and:
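As a rough illustration of the per-cube World matrix idea, here is a minimal sketch in plain C++. It is not the code from the DLL: the `Mat4` type and all helper names are hypothetical, the scale (0.3), orbit radius (4) and orbit speed are assumptions loosely based on the Tutorial05 sample, and the matrices use the row-vector convention so they can be multiplied in the same left-to-right order as in DirectX code. Each small cube gets the same scale and translation, but a different phase on the orbit.

```cpp
#include <array>

#include <cmath>

// Hypothetical 4x4 matrix type, row-vector convention (v' = v * M).
using Mat4 = std::array<std::array<float, 4>, 4>;

Mat4 identity() {
    Mat4 m{};  // zero-initialized
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

Mat4 multiply(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 scaling(float s) {
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}

Mat4 translation(float x, float y, float z) {
    Mat4 m = identity();
    m[3][0] = x; m[3][1] = y; m[3][2] = z;
    return m;
}

Mat4 rotationY(float angle) {
    Mat4 m = identity();
    const float c = std::cos(angle), s = std::sin(angle);
    m[0][0] = c;  m[0][2] = -s;
    m[2][0] = s;  m[2][2] = c;
    return m;
}

// World matrix for small cube `index` out of `count` at time t (seconds).
// The cubes are spread evenly over one orbit around the large cube.
Mat4 smallCubeWorld(int index, int count, float t) {
    const float twoPi = 6.2831853f;
    const float phase = twoPi * index / count;
    const float orbitAngle = -2.0f * t + phase;
    return multiply(multiply(scaling(0.3f), translation(-4.0f, 0.0f, 0.0f)),
                    rotationY(orbitAngle));
}
```

In the Render loop one would then, for each index, upload the resulting matrix to the constant buffer and issue the same draw call again, so only one cube's vertex data is ever needed.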

Converting and Exporting 3D-Graphics Data

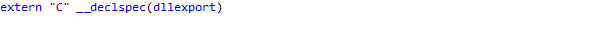

Finally, we want to copy the 3D-graphics data into an array the Silverlight client has provided, so that the client can show it to the user. This is done like so:
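The copy step can be sketched as follows. I assume here that the back buffer has first been copied into the staging texture and that the staging texture has been mapped for reading, which yields a data pointer and a row pitch (in Direct3D 11 these come from `ID3D11DeviceContext::Map`). The function name `copyRows` and its parameters are hypothetical. The important detail is that the row pitch may be larger than `width * 4` bytes, so rows are copied one by one into the tightly packed client array.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>

// Copies a mapped 32-bit image into a tightly packed destination array.
// `mapped`   : first byte of the mapped staging texture (assumed input)
// `rowPitch` : distance in bytes between two rows in the mapped data
// `dest`     : client-provided array of width * height pixels
void copyRows(const std::uint8_t* mapped, std::size_t rowPitch,
              std::uint32_t* dest, int width, int height) {
    const std::size_t rowBytes = static_cast<std::size_t>(width) * 4;
    for (int y = 0; y < height; ++y) {
        std::memcpy(dest + static_cast<std::size_t>(y) * width,
                    mapped + static_cast<std::size_t>(y) * rowPitch,
                    rowBytes);
    }
}
```

After the copy the texture has to be unmapped again before the GPU can use it for the next frame.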

The above is standard code; I obtained it around here (the direct link seems broken). The ConvertToARGB function, however, is a custom addition, replacing the memcpy call (more about that in the section on performance). This ConvertToARGB converts the RGBA format of DirectX to the premultiplied (PM) ARGB format used in Silverlight. This PM ARGB format is considered legacy now. The conversion step is a real performance hit, as anyone can imagine. The function looks like this:

Essentially, this ORs four integers: the first is constructed by shifting the A (alpha, i.e. transparency) byte all the way to the left, and the other three are created by shifting the R, G and B bytes into place. This is a fast algorithm since shifting is a quick operation. I found it here. After the conversion, the pixels are in the correct format in an array that is owned by the Silverlight client application.
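A minimal sketch of such a shift-and-OR conversion, not the original ConvertToARGB listing. It assumes the source pixels are stored as four bytes in R, G, B, A order and that the client expects each pixel as one 32-bit integer laid out as 0xAARRGGBB. All function names are hypothetical. The optional `premultiply` helper shows what true premultiplication would add; for fully opaque pixels it changes nothing.

```cpp
#include <cstddef>
#include <cstdint>

// Packs one pixel given as bytes R, G, B, A into a 0xAARRGGBB integer.
inline std::uint32_t rgbaBytesToArgb(const std::uint8_t* p) {
    return (static_cast<std::uint32_t>(p[3]) << 24) |  // A to the top byte
           (static_cast<std::uint32_t>(p[0]) << 16) |  // R
           (static_cast<std::uint32_t>(p[1]) << 8)  |  // G
            static_cast<std::uint32_t>(p[2]);          // B
}

// Converts pixelCount pixels from RGBA bytes to ARGB integers.
void convertToArgb(const std::uint8_t* src, std::uint32_t* dest,
                   std::size_t pixelCount) {
    for (std::size_t i = 0; i < pixelCount; ++i) {
        dest[i] = rgbaBytesToArgb(src + 4 * i);
    }
}

// Optional: scales the color channels by alpha (integer approximation).
inline std::uint32_t premultiply(std::uint32_t argb) {
    const std::uint32_t a = argb >> 24;
    const std::uint32_t r = ((argb >> 16) & 0xFF) * a / 255;
    const std::uint32_t g = ((argb >> 8) & 0xFF) * a / 255;
    const std::uint32_t b = (argb & 0xFF) * a / 255;
    return (a << 24) | (r << 16) | (g << 8) | b;
}
```

Skipping the premultiply step is only safe when the scene is rendered fully opaque; otherwise semi-transparent pixels would come out too bright on the Silverlight side.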

The DLL Interface

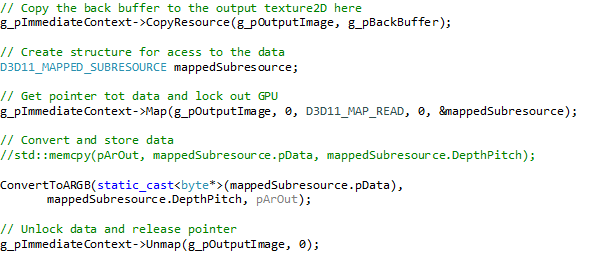

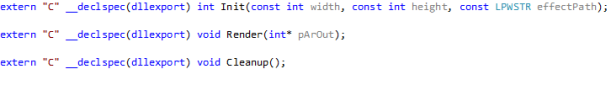

The interface has the following methods:

And for performance measurements:

The above functions return an average time over the Render function, and an average time over the conversion and export, respectively. Details will be discussed below. The export decoration on these functions (typically extern "C" combined with __declspec(dllexport)) results in a clean export of the function names. Without the decoration, the C++ compiler will add a number of tokens (among which at least a few like @#$%^&*) to the function name in order to make it unique. The problem with this is that you’ll have a hard time retrieving the actual function name for use in the Silverlight client.
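To make the export decoration and the timer functions a bit more concrete, here is a hedged sketch of how an exported averaging function could look. The `DLL_EXPORT` macro, the global counters and the helpers `recordRenderTime` and `timedRender` are my own illustrative names; only the name and shape of `GetRenderTimerAv` follow the interface described in this post. How the DLL actually accumulates its averages may differ.

```cpp
#include <chrono>

// extern "C" switches off C++ name mangling; __declspec(dllexport) puts the
// function in the DLL's export table (Windows only, hence the guard).
#if defined(_WIN32)
#define DLL_EXPORT extern "C" __declspec(dllexport)
#else
#define DLL_EXPORT extern "C"
#endif

namespace {
double g_renderTotalMs = 0.0;  // sum of all measured render times
long g_renderCount = 0;        // number of measurements
}

// Hypothetical helper: adds one measurement to the running total.
void recordRenderTime(double ms) {
    g_renderTotalMs += ms;
    ++g_renderCount;
}

// Hypothetical helper: times one call of the given render function.
template <typename RenderFn>
void timedRender(RenderFn&& renderOnce) {
    const auto start = std::chrono::steady_clock::now();
    renderOnce();
    const std::chrono::duration<double, std::milli> elapsed =
        std::chrono::steady_clock::now() - start;
    recordRenderTime(elapsed.count());
}

// Exported under its plain name; writes the average render time in ms.
DLL_EXPORT void GetRenderTimerAv(double* pArOut) {
    *pArOut = g_renderCount > 0 ? g_renderTotalMs / g_renderCount : 0.0;
}
```

With this decoration the function is exported simply as GetRenderTimerAv, which is the name a client passes to its import declaration.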


The Silverlight Client

General Architecture

The application has the following structure:

The App class is the application entry point (as usual). The Application_Startup event handler, depicted below,

first checks if the application is running out-of-browser (OOB). Running OOB is the intended normal use of this application. If so, a MainPage control is instantiated, which will run the DirectX code. If the application is running in-browser, it still needs to be installed. Only after installation does the application have access to the file system (required to save and load the dll) and to the GPU. The application requires Windows 7 or higher and bails out if an older Windows version or an Apple OS is found.

The install page offers to install the application on the user’s PC, as depicted below,

or tells the user that the application is already installed, and hints at ways to uninstall the application if so desired.

If the user installs the application, it starts running out of browser and shows the MainPage with the DirectX animation.

Installing, Uninstalling, and Managing DLL Lifetimes

Installing includes saving the DirectX application in the DLL to a file on the user’s PC. The DLL is packaged with the Silverlight application as a resource. For execution, the DLL has to be loaded in memory, or be present on the PC as a file. Saving the DLL to file is done with code modeled on an example from the NESL application. We store the application at “<SystemDrive>ProgramDataRealManMonths PInvokeDirectXTutorial05”.

Once the DLL is saved to file, we load it into memory using the LoadLibrary function from kernel32.dll. The reason we manage the dll’s lifetime explicitly, instead of implicitly by importing the dll and calling its functions, is that we need to be able to explicitly remove the dll from memory when exiting the application, see below. Loading into memory requires a dll import declaration:

And a call of this function, in the MainPage_Loaded event handler:


Where DllPath is just the path specified above. Is that all? Yes, that’s all.


When the application is exited, we use the handleToDll to release the library with repeated calls to FreeLibrary. Declaration:

Then we call it in the Application_Exit event handler as follows:

The point is that each method we import from the dll increases the reference count. As long as the reference count is larger than zero we cannot unload the DLL, nor delete its file. Not being able to delete the file means we cannot properly uninstall the application – we would leave a mess. Once the ref count is zero, FreeLibrary unloads the library from memory.

The final question in this section is why we delete the dll file every time we exit the application, and create the file every time we start it up. The reason is that if we do not do that, and the user uninstalls the application from the InstallPage (running in-browser), the application does not have the permissions to access the file system, hence the DLL file will not be deleted. So, all these file manipulations are bound to the runtime of the application in order to have a clean install and uninstall experience for the user.

PInvoking the DirectX Functions

Now that the application can be installed, functions from the DirectX application interface can be declared and executed.

    [DllImport(DLL_NAME, SetLastError = true, CallingConvention = CallingConvention.Cdecl)]
    public extern static int Init(int width, int height,
        [MarshalAs(UnmanagedType.LPWStr)] String effectFilePath);

    [DllImport(DLL_NAME, SetLastError = true, CallingConvention = CallingConvention.Cdecl)]
    public extern static void Render([In, Out] int[] array);

    [DllImport(DLL_NAME, SetLastError = true, CallingConvention = CallingConvention.Cdecl)]
    public extern static int Cleanup();

    [DllImport(DLL_NAME, SetLastError = true, CallingConvention = CallingConvention.Cdecl)]
    public extern static void GetRenderTimerAv(ref double pArOut);

    [DllImport(DLL_NAME, SetLastError = true, CallingConvention = CallingConvention.Cdecl)]
    public extern static void GetTransferTimerAv(ref double pArOut);

We make a call to the Init function in the MainPage_Loaded event handler, calls to the dll Render function in the local Render method, and a call to Cleanup in the Application_Exit event handler.

Calls to the timer functions are made when the user clicks the “Get Timing Av” button on the MainPage.

Debugging PInvoke DLLs

At times you may want to trace the flow of control from the Silverlight client application into the native code of the DLL. This, however, is not possible in Silverlight. Silverlight projects have no option to enable debugging native code. Manually editing the project file doesn’t help at this point. Now what?

A workaround is to create a Windows Presentation Foundation (WPF) client. I did this for the current application. This WPF application does not show the graphics data the DirectX library returns; it just gets an array of integers.

To trace the flow of control into the DLL you need to uncheck “Enable Just My Code (Managed only)” at (in the menu bar) Tools | Options | Debugging, and to check (in the project properties) “Enable native code debugging” at Properties | Debug | Enable Debuggers.

If you now set a breakpoint in the native code and start debugging from the WPF application, program execution stops at your breakpoint.

Reactive Extensions

In order to have a stable program execution, the calls to the dll’s Render method are made on a worker thread. We use two WriteableBitmaps: one is returned to the UI thread upon entering the Silverlight method that calls the dll’s Render method, and the other WriteableBitmap is then rendered to by the DLL. After rendering, the worker thread pauses to fill up a time slot of 16.67ms (60 fps).
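The double-buffering and frame-pacing logic can be sketched independently of Silverlight. The following is a C++ illustration of the idea only, not the client code (which is C# and uses WriteableBitmaps); all names are hypothetical. One buffer index is handed out for display while the other is rendered into, and the worker then sleeps for whatever is left of the 1/60 s slot.

```cpp
#include <chrono>
#include <thread>

using Clock = std::chrono::steady_clock;
using Millis = std::chrono::duration<double, std::milli>;

// One frame slot at 60 fps, about 16.67 ms.
constexpr Millis kFrameSlot{1000.0 / 60.0};

// Time left in the slot after `elapsed`; zero if rendering overran the slot.
Millis remainingInSlot(Millis elapsed) {
    return elapsed < kFrameSlot ? kFrameSlot - elapsed : Millis{0.0};
}

// Renders into the buffer that is NOT currently shown, pads the frame to a
// full slot, and returns the index of the freshly rendered buffer.
template <typename RenderFn>
int renderFrame(int frontIndex, RenderFn&& renderInto) {
    const int backIndex = 1 - frontIndex;  // two buffers: indices 0 and 1
    const auto start = Clock::now();
    renderInto(backIndex);
    const Millis elapsed = Clock::now() - start;
    std::this_thread::sleep_for(remainingInSlot(elapsed));
    return backIndex;
}
```

The returned index is what the UI side would use to pick the bitmap to show next, so the UI never reads a buffer that is still being written.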

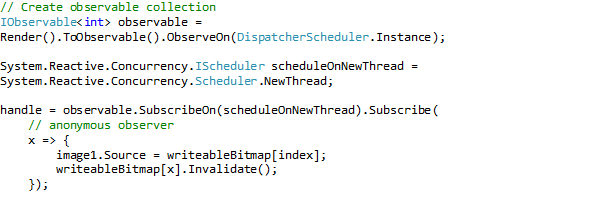

Thread management and processing the indices that point into the WriteableBitmap array (implementation detail :-) ) is done using Reactive Extensions (RX). The idea is that the stream of indices the method returns is interpreted by RX as an Observable collection and ‘observed’ such that it takes the last index upon arrival, and uses the index to render the corresponding WriteableBitmap to screen. This results in elegant and clean code, as presented below.

The first statement creates an observable collection from a method that returns an IEnumerable. Note that ‘observing’ is on the UI thread (referred to by the ‘DispatcherScheduler’).

The SubscribeOn(ScheduleNewThread)-clause creates a new thread for the render process. The lambda expression defines the action if a new int (index) is observed.

Rendering on the worker thread proceeds as follows:

To stop rendering we just set IsRunning to “false”. And that’s it.

Performance

Native DirectX applications generally have higher performance than .NET applications. However, if you pull out the data from a DirectX application and send it elsewhere, there is a performance penalty. You will be doing something like this:

CPU -> GPU -> CPU -> GPU -> Screen instead of CPU -> GPU -> Screen

The extra actions, copying data from the GPU to CPU-accessible memory and converting to premultiplied ARGB, will take time. So the questions are:

- How much time is involved in these actions?

- Will the extra required time pose a problem?

- How does performance compare to the Silverlight 3D-graphics library?

- Are there space (footprint) consequences as well?

Before we dive into answering the questions, note that:

- The use of DirectX will be primarily motivated by the need to use features that are not present in the Silverlight 3D-Graphics / -Audio library at all. In such cases comparative performance is not at all relevant. Performance only becomes relevant if the use of DirectX turns out to be prohibitively slow.

For the measurements I let the system run without a fixed frequency; usually you would let the system run at a frequency of 60Hz, since this is fast enough to make animations fluid. At top speed, the frequency is typically around 110Hz. I found no significant performance differences between debug builds and release builds.

Visual Studio 11CP Performance analysis: Sampling

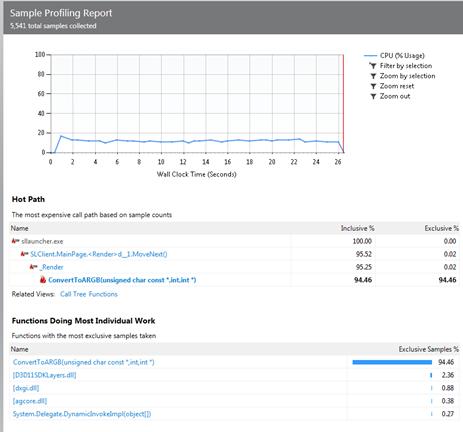

If we run a sampling performance analysis (this involves the CPU only), the bottleneck in the process becomes clear immediately: the conversion from RGBA to premultiplied ARGB (and I’m not even pre-multiplying) takes 96.5% of CPU time.

It is, of course, disturbing that the bulk of the time is spent in some stupid conversion. On the other hand, work done by the GPU is not considered here.

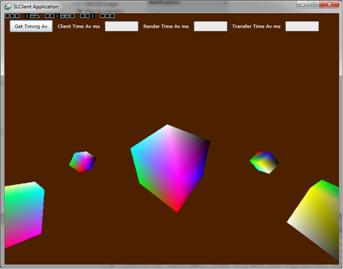

To investigate the contribution of the conversion further, I replaced the conversion by a memcpy call. Then we get a different color palette :-), like this:

But look, the frequency jumps up to 185 fps (80% more). The analysis then yields:

That is: much improved results, but shoving data around is still the main time consumer. Note that the change of color palette by the crude reinterpretation of the pixel array is a problem we could solve at compile time, by pro-actively re-coloring the assets.

Compare to a Silverlight 5 3D-library application

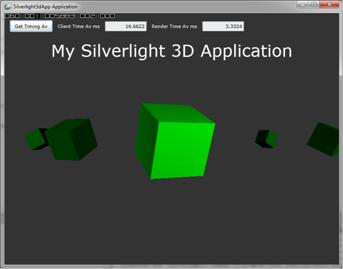

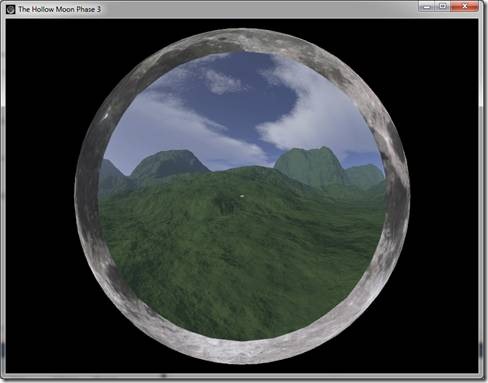

Would the performance of our application hold up to the performance of a Silverlight application using the regular 3D-graphics library? To find out I transformed the standard Silverlight 3D-graphics starter application to a functional equivalent of our Silverlight-DirectX application, as depicted below – one large cube and 5 small cubes orbiting around it (yes, one small cube is hidden behind the large one).

If we click the “Get Timing Av” button, we typically get a “Client Time Average” (average time per Draw event handler call) of about 16.6 ms, corresponding to the 60 fps. The time it takes to actually render the scene averages 3.3 ms. For the Silverlight-DirectX application, this latter time is 0.8ms without conversion and 2.8ms with conversion (if we let it run at max frequency). So, the Silverlight-DirectX alternative can be regarded as quicker.

If we look at the footprint, we see that the Silverlight-DirectX application uses 1,880K of video memory, and has an image of 50,048K in the Task Manager. The regular Silverlight application uses 5,883K of video memory, and has a 37,708K image. Both run in SLlauncher. So, the regular Silverlight application has the smaller process image, although it uses more video memory.

Concluding Remarks

For one, it is feasible to use DirectX from Silverlight. PInvoke is a useful way to bridge the gap. This opens up the road to use of more, if not all, parts of the DirectX libraries. In the example studied here, the Silverlight-DirectX application is faster, but has a larger footprint.

We can provide the user with a clean install and uninstall experience that covers handling and lifetime management of the native dll.

Threading can be well covered with Reactive extensions.

There is a demo application here. This application requires the installation of the DirectX 11 and the Visual C++ 2010SP1 runtime packages (links are provided at the demo application site). I’ve kept these prerequisites separate, instead of integrating their deployment in the demo application installation the NESL way, mainly because the DirectX runtime package has no uninstaller.

If you would like to have the source code for the example program, just leave a comment on this blog requesting it; I’ll send it to you if you provide an e-mail address.

![clip_image002[7] clip_image002[7]](https://marcdrossaers.files.wordpress.com/2012/01/clip_image0027_thumb.jpg?w=543&h=426)